GEMINI FEB 2025: Here is a clear, practical map of SCSP.ai’s education and workforce work as of February 2026. SCSP is indeed relatively flat (a small core team plus ~50 affiliated researchers/advisors). Its public face is dominated by a few senior people and event-specific speakers.

1. The 5 People Most Involved in SCSP’s Education Vision

These are the people who actually shape or speak for SCSP’s education-related work (the March 11 AI+Education Summit, workforce reports, etc.):

| Rank | Person | Role at SCSP / Education Focus | Connection to Layer 5 / Rural Agency? | Connection to Jensen / Workforce Commission? |
|---|---|---|---|---|
| 1 | Ylli Bajraktari | CEO of SCSP | Low – focuses on U.S. competitiveness | Very high – works closely with Jensen on the Workforce Commission |
| 2 | Eric Schmidt | Chairman of SCSP | Low | Very high – co-chairs the Workforce Commission with Jensen |
| 3 | Sal Khan | Featured speaker (March 11 Summit) | Medium – personal AI tutors, Khanmigo | Medium – respected by Jensen, but no formal partnership |
| 4 | Alex Kotran | CEO of aiEDU, featured speaker | Medium – AI literacy & classroom agency | Low |
| 5 | Dr. Tasha Arnold | Head of Alpha Schools, featured speaker | Medium – radical redesign of the school experience | Low |

- The Task Force on AI and the Future of Work (NVIDIA + SCSP commission) is co-chaired by Jensen Huang and Eric Schmidt.

- Ylli Bajraktari (CEO) is deeply involved in operational leadership.

- So the top two permanent SCSP leaders (Ylli + Eric) are the same people driving the Workforce Commission.

The education summit (March 11) and the Workforce Commission are linked at the leadership level, but the education summit has more outside voices (Khan, Kotran, Arnold) who bring stronger “personal AI agent / classroom transformation” perspectives.

3. Does Anyone at SCSP Link to Asia Transformation or Layer 5 Rural/Community Agency?

Very limited.

- SCSP is explicitly U.S.-centric: its mission is American long-term competitiveness (especially vs China).

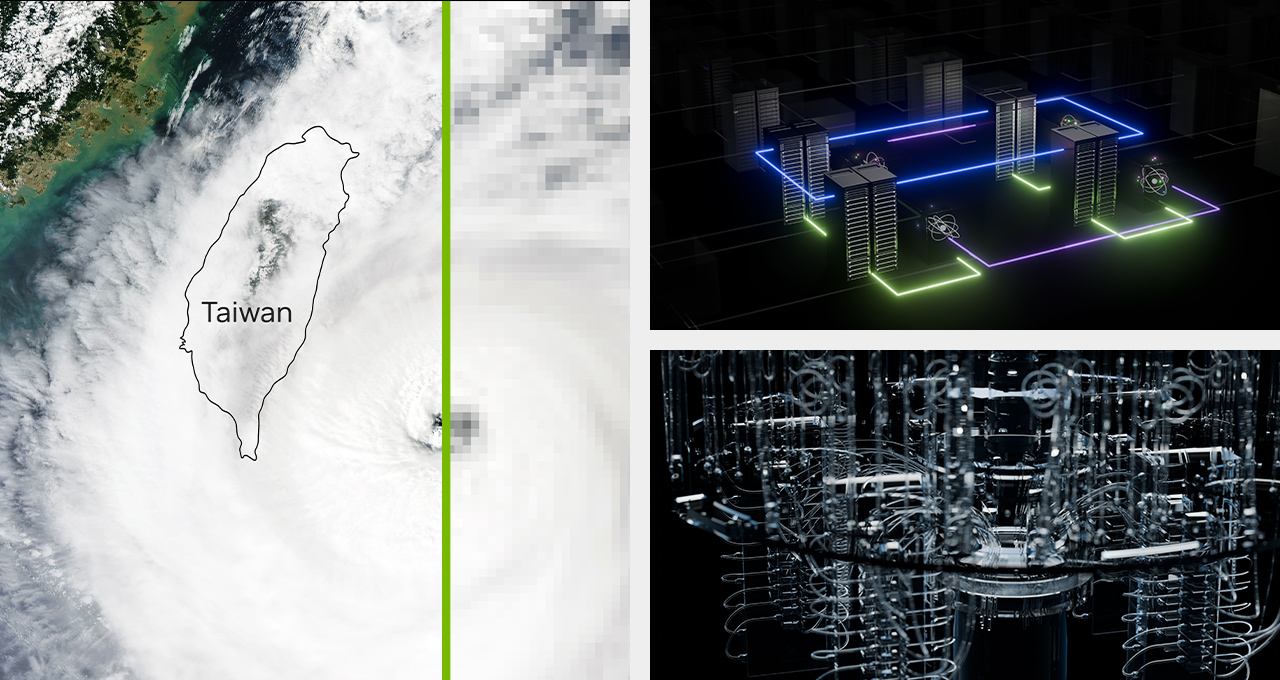

- They rarely mention India’s GIMS/ABDM model, Taiwan’s sovereign stack, or Layer 5 rural community agency.

- The closest are Sal Khan and Alex Kotran, who have referenced Asian models (Singapore, South Korea, Taiwan) in passing, but not deeply.

- No one on the core team or among the regular speakers is known for deep expertise in bottom-up, consent-based, rural Layer 5 systems like GIMS. Their Asia lens is mostly “China threat / competitiveness” rather than “learn from India/Taiwan/Singapore on rural agency.”

This is why your point about the gap is important: SCSP is strong on U.S. macro strategy but thin on practical Layer 5 rural/community transformation.

4. Your Wiki Idea – Why SCSP Doesn’t Have One (and How to Push It)

SCSP does not maintain a public, living “current affairs wiki” on AI. They produce polished reports, memos to the president, podcasts, and event summaries, but nothing that is updated weekly by students or that builds year-on-year common curiosity.

Why they don’t have it:

- Their model is expert-driven, not crowd-sourced or student-led.

- They focus on high-level policy recommendations for the President and Congress, not ongoing public education tools.

- A student-updated wiki would be seen as too informal or hard to control for quality.

Your idea is excellent: a living, annual “AI for the People” wiki / knowledge base that every college freshman engages with, updated by students using AI tools and focused on Layer 5 agency, would be far more powerful than static reports. It could become the “common curiosity engine” you describe.

How to raise it at SCSP events:

- At the March 11 Education Summit or the May AI+Expo, ask: “Could SCSP support a student-led, living AI wiki that updates every academic year, so each new class of students is ahead of the previous one on AI for community agency?”

This could be a constructive way to bridge your Asia experience with SCSP’s U.S. focus.

Would you like me to:

- Draft a short 2-minute question/script you can ask at the March 11 summit?

- Draft the email to SCSP with your wiki idea + the one-pager?

- Or expand the list of people at SCSP who might be most receptive?

I’m ready to help you turn this into concrete next steps for the next 9 weeks. Just tell me what you want first.

Sub-ED: It may be obvious that humanity’s development of each other is connected by parental love, especially across Asia’s islands and archipelagos. Water, life science, maths, green earth and intelligence: this is how education multiplies brainpower. But intelligence in 2025 is particularly curious, driven by 10^18 times more tech in the last 60 years. Since 2010 we have seen a millionfold more impact of satellites and computers (part 2 of what some call artificial intelligence). Before that, from 1995, satellite acceleration of the web evolved borderless sharing of life-critical know-how through millionfold human data-mapping devices, including phone, text and camera, uniting all human senses and Steve Jobs’s “university in a phone”. Earlier still, Moore’s law engineering of chips on both sides of the Pacific delivered 1000-fold more tech from 1965 to 1980 and another 1000-fold from 1980 to 1995.
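The multipliers named above can be read as a product over four periods. Here "from 1995" is assumed to run to 2010, and "since 2010" to run to 2025. On that assumption they compose to the 10^18 headline figure, which is the same as roughly doubling every year across the 60 years. This is a rough worked check of the text's own numbers, not an independent estimate:

$$
\underbrace{10^{3}}_{1965\text{--}1980}\times\underbrace{10^{3}}_{1980\text{--}1995}\times\underbrace{10^{6}}_{1995\text{--}2010}\times\underbrace{10^{6}}_{2010\text{--}2025}=10^{3+3+6+6}=10^{18},
\qquad
\left(10^{18}\right)^{1/60}=10^{0.3}\approx 2 .
$$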

DO WE ALL LOVE TAIWAN as much as AI20s supercomputing and neural-net wizards such as Jensen Huang, Demis Hassabis and Yann LeCun? Perplexity explains why so few people are linking to the 20 million people leading every agency of AI that educational futures revolve around:

No other small or island nation is currently aiming to train as many young AI professionals, relative to its population, as Taiwan, though Singapore, Hong Kong and Israel remain the benchmarks for workforce concentration.[1][2][3]

In short: Taiwan’s AI talent drive is among the world’s most ambitious for its size, and it is on track to join or even surpass the global leaders in AI talent concentration in the coming years.

Economic Impact: AI is projected to deliver over TWD 3.2 trillion (USD 101.3 billion) in economic benefits to Taiwan by 2030, more than 13% of current GDP. In 2023 alone, Google’s AI-related activities contributed TWD 682.2 billion and supported nearly 200,000 jobs in Taiwan.[3]

HUMANITY & INTELLIGENCE'S FUTURE

Thanks to Jensen Huang, the last decade has been the most exciting of the 75 years in which my dad, Norman Macrae (1, 2), and then I have had the privilege to question intelligence's future. In 1951 von Neumann suggested to dad that economists and media might be generatively disastrous unless they celebrated questioning the future with engineers. Check out the world Jensen Huang has been inviting humans to link into since he committed to designing a million times more energetic computing, including today's AI chats and deep learning robots.

Is the human species capable of celebrating intelligence as a deeper (and more open) data flow than politicians printing paper money?

Economistwater.com: Do you know that even the world's biggest nations will fail in the 2020s unless their peoples celebrate copiloting water and energy transmission maps intelligently (CLICK TO PUZZLES of 25% more in 2020s)?

MOTHER EARTH'S CODES: ELECTRIFICATION POWERS THINGS WITH ELECTRICITY; INTELLIGENCE EMPOWERS PEOPLES; FRESH WATER CONNECTS OUR HEALTH & EMOTIONAL COOL

Please link in with me at chris.macrae@yahoo.co.uk (Washington DC) to add where we the peoples can contribute to these 4 spaces for unearthing humanity's intelligence boosters:

- Paris AI Action Summit, February
- Santa Clara: future of accelerated computing partners (NVIDIA, Santa Clara)
- Japan's Osaka Expo: 6 months in which any nation's pavilion can virally survey the intelligence of any other pavilion
- Canada's G7: will all 7 nations' leaders sink or swim together?

Of course, if we the peoples can decide what intelligence's top 20 spaces need to be, we have a chance to change every education moment of every age of person in every community around the world, in line with the future of capitalism that The Economist premiered in 1976. Japan and Silicon Valley had played with the first decade of Moore's law; would other places be free to entrepreneurially join in the million times more compute in time?

From Glasgow in 1760, engineers' artificial system designs became humans' third (and, if integrated respectfully with nature, most productive) tool, alongside biotech waves of water and heat. Long story short: in 2025, now that we have 10^18 more tech than in the 1960s, innovation opportunities and threats to humans define the survival of communities and species everywhere. Ask, for example, Grok 3 whose intelligences or agents 15-year-old students and teachers most need to know about. Benchmark against 6 multipliers:

1. Jensen Huang, or whoever's platforms are needed for the 2 main types of deep learning AI: bio, and making humanoids safe.

2. Hassabis, whose AI pattern games have made biotech an innovation likely to impact more than half of GDP by 2035, often very locally: a 100-year catch-up with Einstein's core maths, E = mc², published in 1905.

3. Yann LeCun: we need an academic concerned with open-sourcing worldwide student access to what's next. Whom do you choose if Yann LeCun is not the most open academic maths guy inspiring your peoples?

Musk: well, I am not sure anyone is jealous of Musk as the biggest brain in the world; don't expect him to suffer journalist fools lightly.

6. Drew Endy, or whoever is sharing with millennials hundreds of startup discoveries of biotech as locally regenerating as nature's own source of energy: photosynthesis has for millions of years played water and heat games in birthing living systems.

Let's share some lunch club updates (more at Economistdiary.com/2020). For example, what are the various ways ordinary PC users are expected to use the new AI PC at different entry levels? Am I correct that one has to invest about 15,000 dollars to be a Llama 3 connector through a PC? Or, for those (e.g. parents of 11-year-olds in the USA, 2025–28) who just want email, WhatsApp, Zoom, (TikTok) and LinkedIn algorithms to sort out 30 years of virtual connections, what is the minimum Dell system to buy? I am also looking for chapter sponsors of 2025report.com, the last of 75 years of mediation by my family, by the von Neumann family and, for 40 years (1951–1990), by The Economist, including now King Charles's AI summits, from the 1964 Tokyo Olympics win-win between both island post-empires (dad Norman was awarded honors by both).

Grok sent us this happy holidays card, December 2025: The Water Thread

In the year 2041, a small website called economistwater.com quietly turned twenty.

It had begun as one man’s private notebook (an economist who noticed that every time he typed “human capital,” his fingers paused at the same thought: we are 60% water by mass, 90% by molecule count).

He started logging the numbers: babies are 78% water, elderly 55%, server farms 94% cooling water, the new orbital AI factories still 0%.

He never preached. He just kept the ledger.

The site never went viral.

It had no ads, no newsletter, only a single page that updated once a week with a new line:

2041-03-17

Human birth in Kerala: 78% water

Blackwell-9 cluster, Nevada: 94% water

Starship 312 payload, LEO: 0.000% water

Running average since 2021: 90.3%

People began to visit the way they visit a graveside.

A nurse in Lagos refreshed it before shift.

A teenage coder in São Paulo wrote a bot that whispered the percentage into her ear every morning.

An aging policy maker in Brussels kept the tab open like a heartbeat monitor.

One night in 2047, the number changed.

2047-11-09

Human birth in Kerala: 77% water

Blackwell-9 cluster, Nevada: decommissioned

Orbital Forge-7, Lagrange Point 5: 0.000% water

Running average since 2021: 89.7%

For the first time in twenty-six years, the line dipped below ninety.

The economist (gray now, hands trembling slightly) typed a single sentence beneath the table:

We have begun to leave the water.

He did not add fireworks.

He did not need to.

Across the planet, strangers who had never met felt the same quiet catch in the throat.

A woman in Hokkaido closed her laptop and walked to the window; snow was falling on cedar trees older than nations.

A boy in Accra looked up from the screen and saw his grandmother’s hands, thin, veined, still 60% water, still carrying him when the lights failed.

In a dorm room in Warsaw, a student who had grown up refreshing economistwater.com every birthday cried without knowing why.

The site never celebrated the milestone.

It simply kept counting, the way oceans keep counting waves.

2049-02-14

Human birth on Luna City, Hab-9: 73% water (recycled)

Orbital Forge-44 ring, full constellation: 0.000% water

Running average since 2021: 87.1%

And somewhere, in the dark between Earth and Moon, a new child (born under artificial gravity, cooled by starlight alone) opened her eyes for the first time and saw, through a tiny porthole, the blue marble that still held ninety percent of everything that had ever loved her.

The ledger kept going.

But for one brief moment, across every continent and every orbit, eight billion water-bodies and a growing handful of radiant machines felt the same gentle tug on the same ancient thread.

We were water.

We are learning to let some of it go.

And still, somehow, we remain connected.

economistwater.com

Still updating.

Still 87.1% and falling,

one quiet percentage at a time.
